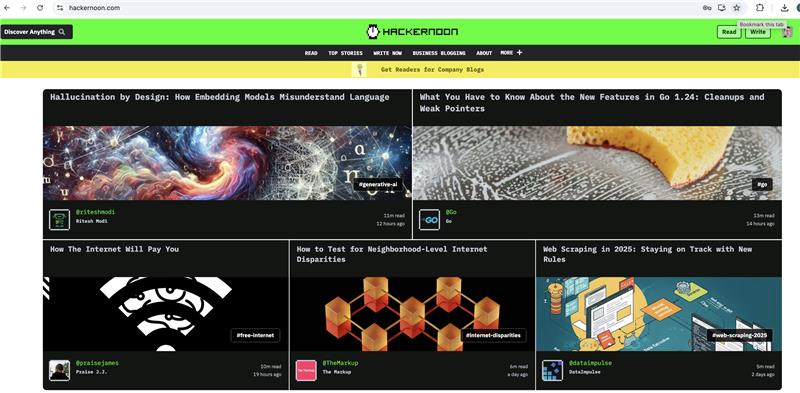

Guess what? I just checked my phone and nearly dropped it – our article is sitting on the HackerNoon homepage right now! And it’s not just there… it’s one of the top posts!

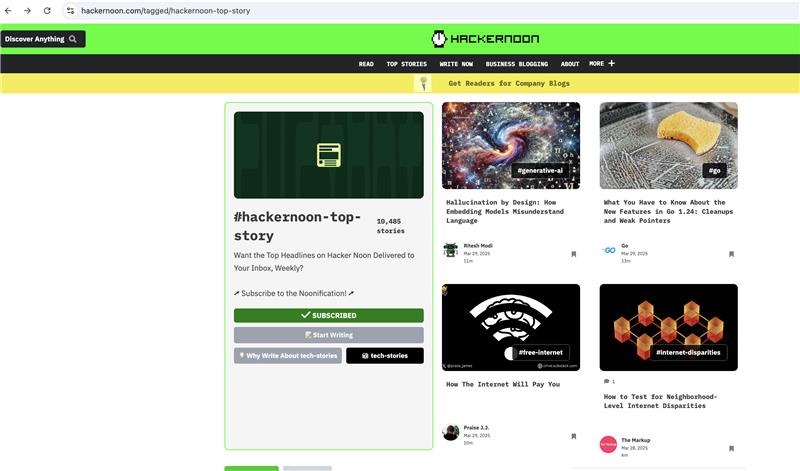

I had to double-check to make sure I wasn’t seeing things. But nope, there it is, right at the top of the page with that little “top stories” tag next to it.

https://hackernoon.com/hallucination-by-design-how-embedding-models-misunderstand-language

I’m not gonna pretend I expected this kind of reception. When we submitted it, I thought maybe a few hundred views if we were lucky. Now I’m watching the counter tick up every time I refresh the page.

If you haven’t seen it yet, head over to HackerNoon and check it out. And thanks to everyone who helped edit, proofread, or just listened to me ramble about this topic for months. You know who you are.

Embeddings fascinate me because they’re both brilliant and frustratingly blind. These models convert text into vectors of numbers, so computers can find similar meanings by comparing those vectors. But they miss things that seem obvious to us humans.

I’ve watched them rate “take medication” and “don’t take medication” as 96% identical!

Or treat “2mg” and “20mg” as practically the same thing.

It’s like they understand the ingredients of language but miss the recipe. These aren’t rare glitches – they’re fundamental limitations that cause real problems when these systems help doctors prescribe medicine or lawyers review contracts. Understanding these blind spots isn’t just academic – it’s essential if we want AI that we can actually trust with important decisions.
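
To make the "ingredients but not the recipe" idea concrete, here is a minimal toy sketch. It is not one of the embedding models from the article and it will not reproduce the 96% figure. It assumes a deliberately crude stand-in, a bag-of-words count vector, and the helper names (`bag_of_words`, `cosine_similarity`, `similarity`) are made up for illustration. The point is only to show how a representation that mostly tracks which words are present can score a sentence and its negation as close neighbours.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Toy stand-in for an embedding: word counts, with word order thrown away."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    # Only words present in both vectors contribute to the dot product.
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has no direction to compare
    return dot / (norm_a * norm_b)


def similarity(x, y):
    """Compare two texts using the toy bag-of-words vectors."""
    return cosine_similarity(bag_of_words(x), bag_of_words(y))


# One extra word ("don't") flips the meaning but barely moves the vector.
print(round(similarity("take medication", "don't take medication"), 3))  # 0.816

# Same ingredients, different recipe: word order is invisible to this toy.
print(round(similarity("dog bites man", "man bites dog"), 3))  # 1.0
```

Real embedding models are far more sophisticated than this, but the failure has the same flavour: the shared words dominate the comparison, and the small token that reverses the meaning gets very little weight.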

Next up? Well, I’ve got some ideas… but first, I think we deserve to celebrate this one.
